How to Run the Word Count Program in Eclipse (with Screenshots)

This post follows up on my earlier post, Hadoop Developer Self Learning Outline.

It is a short and simple tutorial on a MapReduce example.

In it, Hadoop Quiz covers the Word Count example.

Prerequisites to run this example

1) Java installed and configured

2) Eclipse

(I assume Java and Hadoop are installed and configured on your system.)

Let's start with Eclipse.

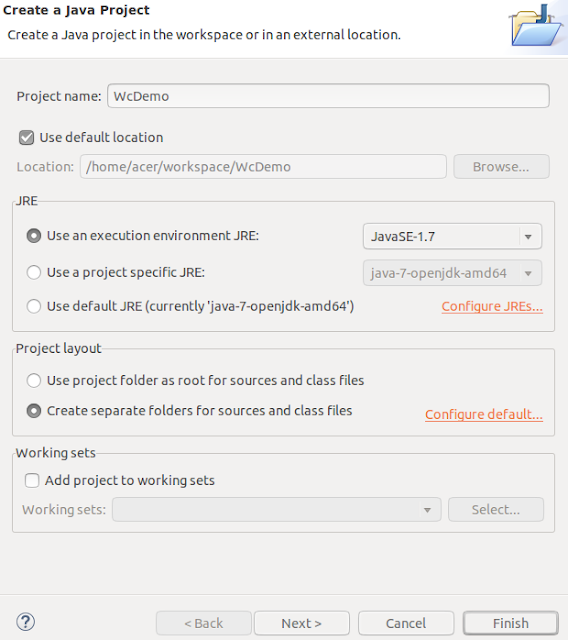

Step 1: Create a Java Project in Eclipse.

WcDemo is the project name.

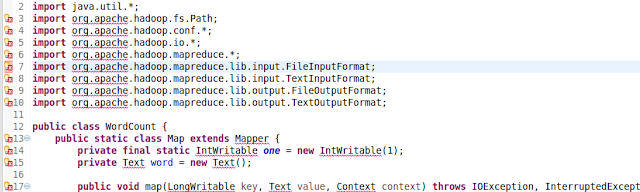

Step 2: Create a class named WordCount.

Paste this code into it:

```java
// Packages
import java.io.IOException;
import java.util.*;

import org.apache.hadoop.fs.Path;
import org.apache.hadoop.conf.*;
import org.apache.hadoop.io.*;
import org.apache.hadoop.mapreduce.*;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

public class WordCount {

    // Mapper class: emits (word, 1) for every whitespace-separated token in a line
    public static class Map extends Mapper<LongWritable, Text, Text, IntWritable> {

        private final static IntWritable one = new IntWritable(1);
        private Text word = new Text();

        @Override
        public void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            String line = value.toString();
            StringTokenizer tokenizer = new StringTokenizer(line);
            while (tokenizer.hasMoreTokens()) {
                word.set(tokenizer.nextToken());
                context.write(word, one);
            }
        }
    }

    // Reducer class: sums the 1s emitted for each word
    public static class Reduce extends Reducer<Text, IntWritable, Text, IntWritable> {

        @Override
        public void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            int sum = 0;
            for (IntWritable val : values) {
                sum += val.get();
            }
            context.write(key, new IntWritable(sum));
        }
    }

    // Driver class: args[0] is the input path, args[1] is the output path
    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();

        // On Hadoop 2.x and later this constructor is deprecated;
        // Job.getInstance(conf, "wordcount") is the equivalent call there.
        Job job = new Job(conf, "wordcount");

        // Tells Hadoop which JAR contains the job classes when run with "hadoop jar"
        job.setJarByClass(WordCount.class);

        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        job.setMapperClass(Map.class);
        job.setReducerClass(Reduce.class);

        job.setInputFormatClass(TextInputFormat.class);
        job.setOutputFormatClass(TextOutputFormat.class);

        FileInputFormat.addInputPath(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // Exit with a non-zero status if the job fails
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```
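One detail of the mapper is worth seeing in isolation: StringTokenizer with its default delimiters splits on whitespace only, so punctuation and letter case stay attached to each word. The sketch below is plain Java with no Hadoop dependency. The class TokenizerSketch and the normalization rule (lower-casing and trimming non-alphanumeric characters from both ends) are my own assumptions for illustration and are not part of the WordCount code above.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.StringTokenizer;

// Hypothetical helper for illustration; not part of the tutorial's WordCount class.
final class TokenizerSketch {

    // Splits the way the mapper does: on spaces, tabs, and line breaks only.
    static List<String> rawTokens(String line) {
        List<String> tokens = new ArrayList<>();
        StringTokenizer tokenizer = new StringTokenizer(line);
        while (tokenizer.hasMoreTokens()) {
            tokens.add(tokenizer.nextToken());
        }
        return tokens;
    }

    // Assumed normalization: lower-case each token and strip leading and
    // trailing characters that are neither letters nor digits.
    static List<String> normalizedTokens(String line) {
        List<String> tokens = new ArrayList<>();
        for (String token : rawTokens(line)) {
            String cleaned = token.toLowerCase(Locale.ROOT)
                    .replaceAll("^[^\\p{L}\\p{N}]+|[^\\p{L}\\p{N}]+$", "");
            if (!cleaned.isEmpty()) {
                tokens.add(cleaned);
            }
        }
        return tokens;
    }

    public static void main(String[] args) {
        String line = "Hello, hello world!";
        System.out.println(rawTokens(line));        // [Hello,, hello, world!]
        System.out.println(normalizedTokens(line)); // [hello, hello, world]
    }
}
```

With the raw tokens, "Hello," and "hello" would be counted as two different words. Whether that matters depends on your input, so treat the normalization as optional.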

Eclipse will show many errors at this point. Hold on: we now need to resolve the dependencies by adding all the dependency JARs to the project's build path.

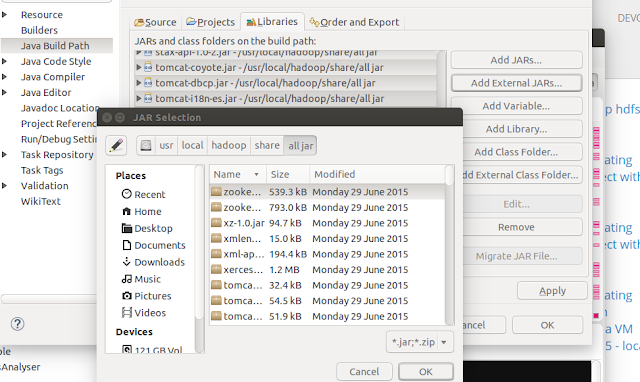

Step 3: Add Dependency JARs

Right-click the project, open Properties, and select Java Build Path.

Add all the JARs from $HADOOP_HOME/lib and $HADOOP_HOME (where the Hadoop core and tools JARs live). In my case, I copied all the JARs into one folder and added them from there.
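If errors remain after adding the JARs, a quick way to see which classes are still missing is to try loading them by name. This is only a sketch: ClasspathCheck is a hypothetical helper of mine, and the class names it checks are simply some of those imported by WordCount.

```java
// Hypothetical helper for checking the build path; not part of Hadoop.
final class ClasspathCheck {

    // Returns true if the named class can be found on the current classpath.
    static boolean isOnClasspath(String className) {
        try {
            // initialize=false: only locate the class, do not run its static initializers
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Some of the classes that WordCount imports
        String[] required = {
            "org.apache.hadoop.conf.Configuration",
            "org.apache.hadoop.io.Text",
            "org.apache.hadoop.mapreduce.Job"
        };
        for (String name : required) {
            System.out.println(name + " -> " + (isOnClasspath(name) ? "found" : "MISSING"));
        }
    }
}
```

Run it as a Java application inside the same Eclipse project so that it sees the same build path as WordCount.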

O/P

Step 4:Exporting the Jar

Now we want to export it as a jar. Right click on WordCount project and select "Export...":

Exporting the Jar, Now we want to export it as a jar. Right click on WordCount project and select "Export...":

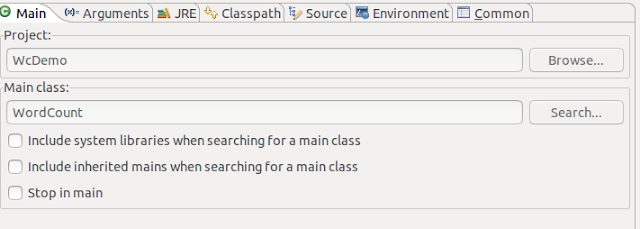

enter path to store jar and after clicking next mention the main class name (If you dont like to mention it in HDFS CLI).

Provide input and output path and run below command to get the output same as above.

hadoop jar /home/cloudera/WordCount.jar WordCount input/wordcount.txt output

to see the output:

hadoop fs -ls output

A hadoop Blog: Blog Link FB Page:Hadoop Quiz

Comment for update or changes..

Reference;

Definitive guide 4th edition, Cloudera and hadoop blogs.


Step 4: Set Input and Output

We need to set the input file that will be used during the Map phase; the final output will be generated in the output directory by the Reduce task. Edit the Run Configuration and supply both paths as command-line arguments. sample.txt resides in the project root. Your Project Explorer should contain the following.

Select the project name and browse for the main class. Setting the main class in Eclipse will also be helpful when running the same program from the CLI.

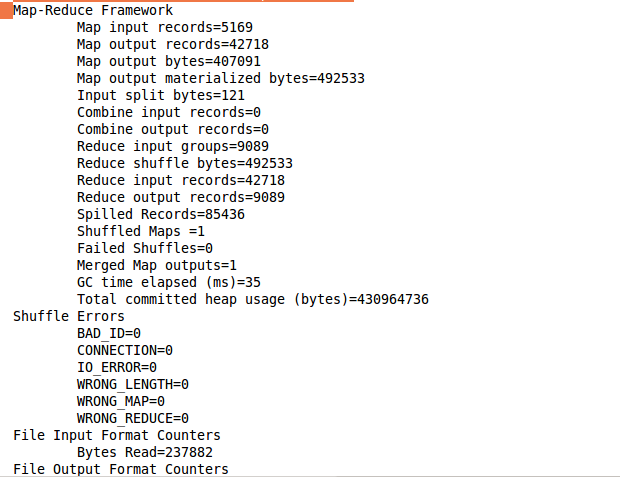
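The driver reads args[0] and args[1] directly, so running it without both program arguments fails with an ArrayIndexOutOfBoundsException. Hadoop also refuses to start the job if the output directory already exists. A minimal sketch of an argument check is shown here; ArgsCheck and its messages are hypothetical, and the directory name "output" is only an assumed example.

```java
// Hypothetical helper; the tutorial's driver uses args[0] and args[1] without checks.
final class ArgsCheck {

    // Returns null when the arguments look usable, otherwise a usage message.
    static String validate(String[] args) {
        if (args == null || args.length != 2) {
            return "Usage: WordCount <input path> <output path>";
        }
        if (args[0].trim().isEmpty() || args[1].trim().isEmpty()) {
            return "Input and output paths must not be empty";
        }
        return null;
    }

    public static void main(String[] args) {
        // Example argument lists, as they might be typed into the Run Configuration
        String[][] examples = {
            {"sample.txt", "output"}, // assumed names: input file and output directory
            {"sample.txt"}            // output path forgotten
        };
        for (String[] example : examples) {
            String problem = validate(example);
            System.out.println(problem == null ? "OK" : problem);
        }
    }
}
```

A check like this could be called at the top of main before the Job is created. Delete the old output directory, or pick a new name, before each rerun.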

Output:

```
File System Counters
    FILE: Number of bytes read=1461218
    FILE: Number of bytes written=2115777
    FILE: Number of read operations=0
    FILE: Number of large read operations=0
    FILE: Number of write operations=0
Map-Reduce Framework
    Map input records=5169
    Map output records=42718
    Map output bytes=407091
    Map output materialized bytes=492533
    Input split bytes=121
    Combine input records=0
    Combine output records=0
    Reduce input groups=9089
    Reduce shuffle bytes=492533
    Reduce input records=42718
    Reduce output records=9089
    Spilled Records=85436
    Shuffled Maps =1
    Failed Shuffles=0
    Merged Map outputs=1
    GC time elapsed (ms)=35
    Total committed heap usage (bytes)=430964736
Shuffle Errors
    BAD_ID=0
    CONNECTION=0
    IO_ERROR=0
    WRONG_LENGTH=0
    WRONG_MAP=0
    WRONG_REDUCE=0
File Input Format Counters
    Bytes Read=237882
File Output Format Counters
    Bytes Written=92142
```
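A few of these counters relate to each other in a simple way. Map input records (5169) is the number of lines read, Map output records (42718) is the number of (word, 1) pairs emitted, and because no combiner is set (Combine input records=0) the reducer receives the same 42718 records. Reduce input groups (9089) is the number of distinct words, which is why Reduce output records is also 9089. The sketch below imitates the job in plain Java on a tiny input to make those relationships visible. CounterSketch is a hypothetical class of mine, not Hadoop code, and the two input lines are made up.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Plain-Java imitation of the job, used only to illustrate the counters; not Hadoop code.
final class CounterSketch {

    // The figures that correspond to the framework counters discussed above.
    static final class Result {
        final int mapInputRecords;         // one per input line
        final int mapOutputRecords;        // one per (word, 1) pair emitted
        final Map<String, Integer> counts; // one entry per distinct word (a reduce group)

        Result(int mapInputRecords, int mapOutputRecords, Map<String, Integer> counts) {
            this.mapInputRecords = mapInputRecords;
            this.mapOutputRecords = mapOutputRecords;
            this.counts = counts;
        }
    }

    static Result run(List<String> lines) {
        int mapOutputRecords = 0;
        // TreeMap keeps keys sorted, roughly as the reducer sees them
        Map<String, Integer> counts = new TreeMap<>();
        for (String line : lines) {
            StringTokenizer tokenizer = new StringTokenizer(line);
            while (tokenizer.hasMoreTokens()) {
                counts.merge(tokenizer.nextToken(), 1, Integer::sum); // the "reduce" sum
                mapOutputRecords++;
            }
        }
        return new Result(lines.size(), mapOutputRecords, counts);
    }

    public static void main(String[] args) {
        Result r = run(Arrays.asList("to be or not", "to be"));
        System.out.println("Map input records=" + r.mapInputRecords);   // 2
        System.out.println("Map output records=" + r.mapOutputRecords); // 6
        System.out.println("Reduce input groups=" + r.counts.size());   // 4
        System.out.println(r.counts); // {be=2, not=1, or=1, to=2}
    }
}
```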


Step 4:Exporting the Jar

Now we want to export it as a jar. Right click on WordCount project and select "Export...":

Exporting the Jar, Now we want to export it as a jar. Right click on WordCount project and select "Export...":

enter path to store jar and after clicking next mention the main class name (If you dont like to mention it in HDFS CLI).

Provide input and output path and run below command to get the output same as above.

hadoop jar /home/cloudera/WordCount.jar WordCount input/wordcount.txt output

To see the output:

hadoop fs -ls output
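The listing shows the files the job wrote. With a single reducer and the mapreduce API used here, the counts normally end up in a file named part-r-00000, which you can print with hadoop fs -cat output/part-r-00000. TextOutputFormat writes one line per word in the form word, a tab, then the count. If you copy that file locally and want to process it further, a small parser might look like the sketch below. OutputParser is a hypothetical helper, and the sample lines are made up rather than taken from the run above.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper for reading WordCount output lines; not part of Hadoop.
final class OutputParser {

    // Parses lines of the form "word<TAB>count", keeping the file's order.
    static Map<String, Integer> parse(List<String> lines) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (String line : lines) {
            int tab = line.lastIndexOf('\t');
            if (tab < 0) {
                continue; // skip anything that is not key<TAB>value
            }
            String word = line.substring(0, tab);
            int count = Integer.parseInt(line.substring(tab + 1).trim());
            counts.put(word, count);
        }
        return counts;
    }

    public static void main(String[] args) {
        // Made-up sample lines in the same format as part-r-00000
        List<String> sample = Arrays.asList("be\t2", "not\t1", "or\t1", "to\t2");
        System.out.println(parse(sample)); // {be=2, not=1, or=1, to=2}
    }
}
```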

A Hadoop blog: Blog Link | FB page: Hadoop Quiz

Please comment with any updates or changes.

References:

Hadoop: The Definitive Guide, 4th edition; Cloudera and Hadoop blogs.
